
Continuous Feedback to Improve AI Results

AI tools let many of our customers process millions of words quickly and efficiently. At large volumes, however, AI is prone to errors and, at times, outright laziness. This is why our pre-translation setup and continuous feedback process matter: they let us spot and fix the places where the AI goes wrong.

We call this our Quality Review process. It applies principles similar to reinforcement learning from human feedback (RLHF), but it optimises the outputs rather than retraining the models.

A method that continuously improves the content on a site

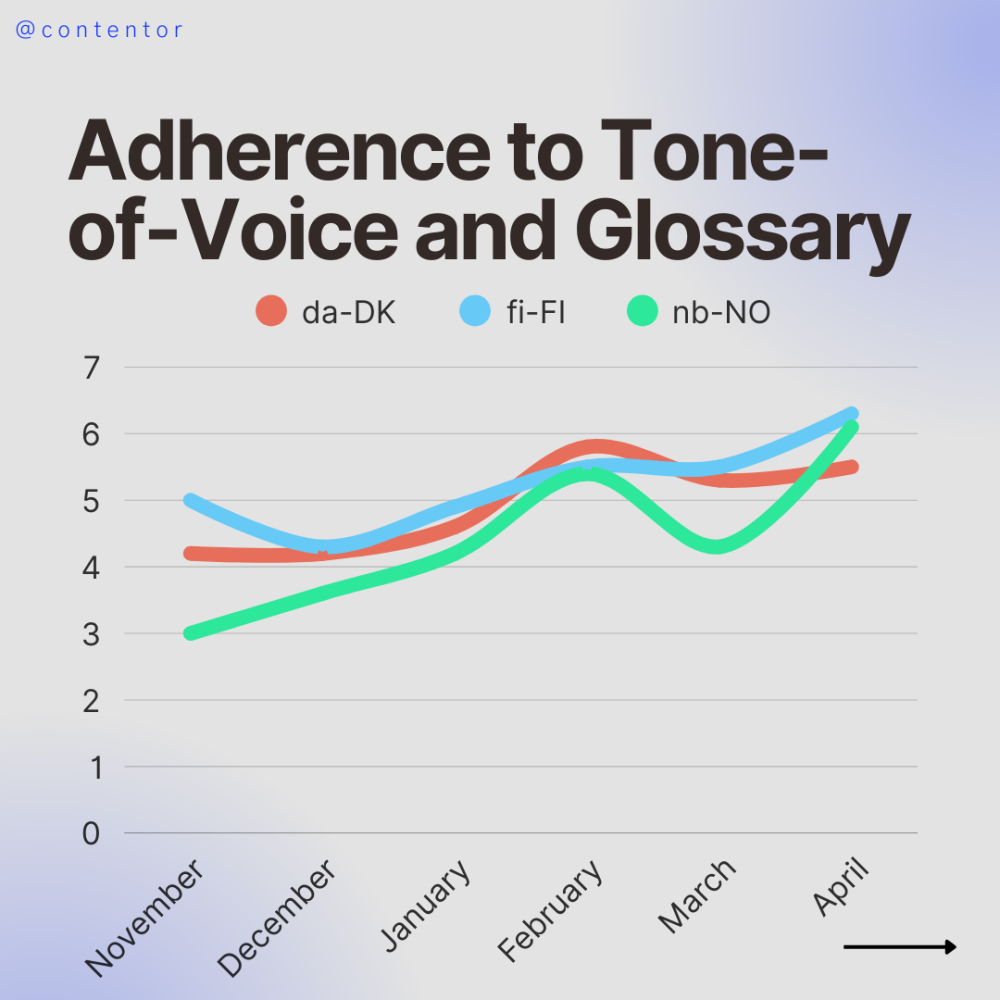

The chart above shows the last six months of improvement for one of our customers.

By combining our AI setup with human linguists and SEO expertise, we can enhance this brand's content in several ways.

We score the text on several dimensions. Readability has proven important for both SEO and conversion optimization, while adherence to grammar matters for the visitor experience.

We also score tone of voice, which is important for brands that want consistent messaging across multiple countries and markets.

All scores are on a scale from 0 to 7, where 7 is the highest possible score.

Each review improves the system, so it performs better over time. Through our API, we can also revisit previously translated content, improve it, and push it live again automatically.

As the chart shows, the Quality Reviews lead to improvement across the board, with some languages improving by more than 100% since the AI flow was first set up.
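To make the "more than 100%" figure concrete, here is a minimal sketch. It assumes the percentage is a relative change in the 0 to 7 score, which the article does not state explicitly. The function names and example scores are hypothetical and are not customer data. Because 7 is the maximum score, a language can only improve by more than 100% if its initial score was below 3.5.

```python
# Sketch: relative score improvement on the 0-7 Quality Review scale.
# Assumption: "improved over 100%" means (current - initial) / initial.
# Function names and example scores are hypothetical.

MAX_SCORE = 7.0


def relative_improvement(initial: float, current: float) -> float:
    """Return the percentage change from an initial score to a current score."""
    if not 0 < initial <= MAX_SCORE or not 0 <= current <= MAX_SCORE:
        raise ValueError("scores must be on the 0-7 scale, with initial > 0")
    return (current - initial) / initial * 100


def max_possible_improvement(initial: float) -> float:
    """Return the largest percentage gain reachable from `initial` (reaching 7)."""
    return relative_improvement(initial, MAX_SCORE)


if __name__ == "__main__":
    # Hypothetical before/after scores, for illustration only.
    print(relative_improvement(3.0, 6.0))  # 100.0
    print(max_possible_improvement(3.5))   # 100.0
    print(max_possible_improvement(2.0))   # 250.0
```

Under this assumption, a language that starts at 3.5 can at best double its score, so any gain above 100% implies a weaker starting point.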

This is why we believe that AI combined with human insight and expertise forms a powerful and effective partnership. Using AI out of the box makes this much harder, because it is the feedback that improves the system and its setup.

Do you want to know more? Reach out to us.